🍜 About the Event

Every semester our student council organizes an event called MSEating. Every student in our department can sign up individually either as a host or as a guest. All hosts have to arrange a dinner for at least 4 other students at their place. The matching of guests and hosts happens anonymously. All guests will receive the address of their respective host via email.

In this project I optimized this distribution since the blackbox-excel-tool used before was not working anymore. I also sent out all emails from the generated csv tables and all worked fine 😊

A more detailed description of the project can be found here: https://github.com/dostuffthatmatters/MSEatingOptimization

Regarding the code quality: Since Covid-19 began, there has not been an event like this anymore. For the next event the very next step is to refactor the whole repository and improve the code quality❗

Address 👉 Coordinates

Right now the coordinates are purely determined by a persons zip code. For every zip code that appears in the given dataset I request the geographic coordinates from the Google Geocoding API.

In order to reduce the number of API calls I have to make, I am storing the results in a local sqlite database (using the SQLAlchemy connector). The importing of new zip codes happens automatically as the coordinates of zip codes are requested: Only for every unknown zip code record a request to the Geocoding API will be triggered.

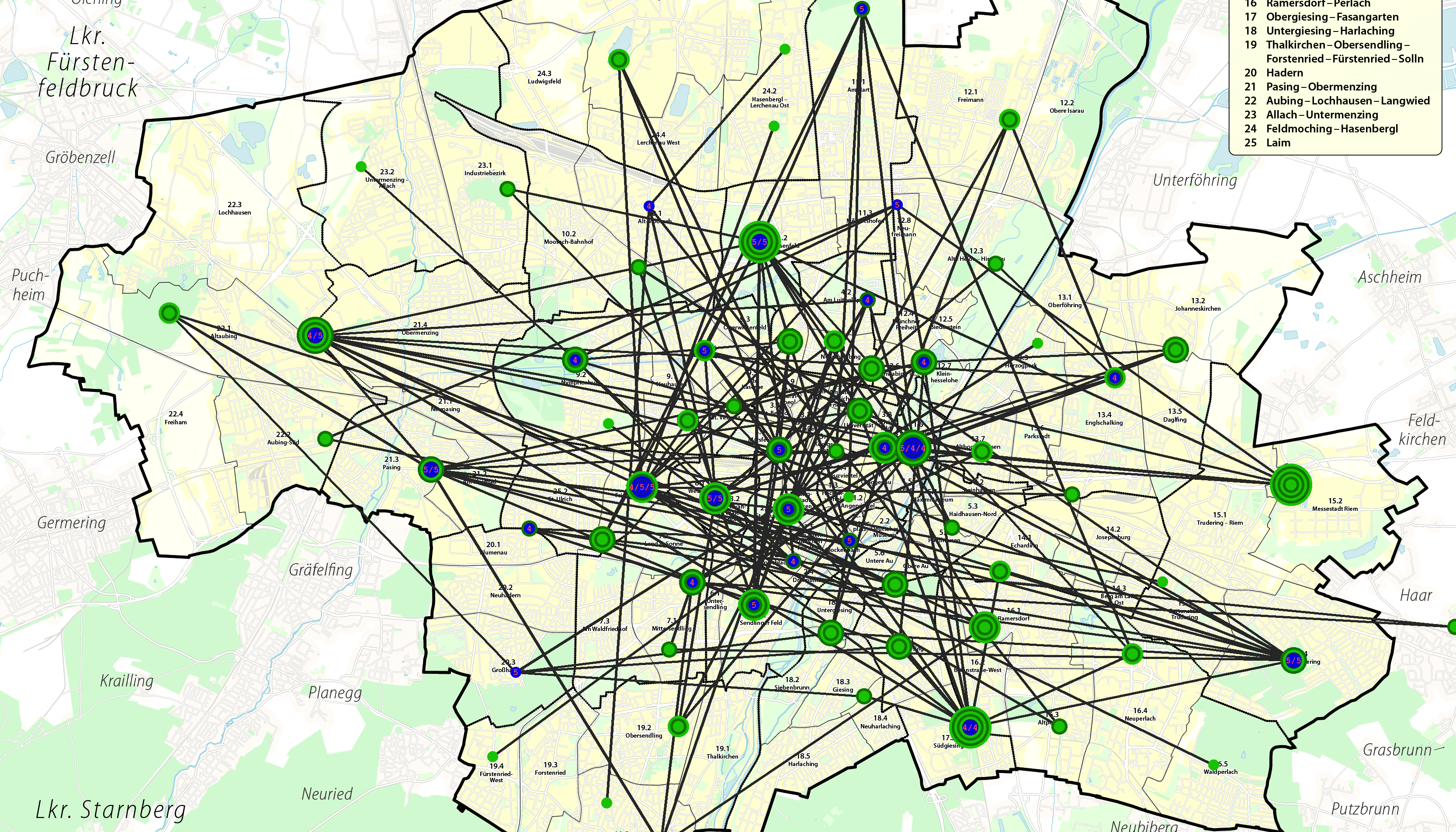

🗺️ Visualizing the Results

In order to better see, what is going on during the optimization, I am using PIL to render all guests, hosts and matchings on a map. The map is a static image and I actually just draw a lot of circles and lines in different colors at specific (x,y) image coordinates - nothing special.

This happens here: https://github.com/dostuffthatmatters/MSEatingOptimization/blob/master/Optimization/visual_link.py

Hosts = blue dots/rings 🔵

Guests = green dots/rings 🟢

In order not to share any private addresses of students, the optimizations you see here are generated for a random distribution of attendees over the munich area.

🏘️ About the Optimization

When a few hosts are located right next to each other (here: max. 500 meters apart) then it makes sense to combine these hosts in order to save computing power and get a better optimization in the same amount of time. I call them "Host Hubs".

Every guest chooses its favorite host hub (the closest one). If that host hub has more requesting guests than spots to fill, then the closest guests will be preferred. After the assignment of guests all unmatched guests will prefer their next-closest host hub with free spots. This iteration happens until there are either no unmatched guests or no host hubs without free spots left.

With a lot of these guest-pairs, if they were to switch hosts, the overall mean travel distance would be less than before the switch.

So I applied a brute-force-iteration after the initial proposed matching. The algorithm iterates over every guest with a specifically long travel distance and tries to find a switch-partner to minimize the mean travel distance.

The algorithm iterates over all badly-routed guests at most 100 times or until no improvement happened in one full iteration. Most times the optimization finishes after less than 15 iterations (with 400 attendees).

However, the thing that is more important than mean linear travel distance is the mean squared travel distance. A few very long routes are worse - and more unfair - than a lot of slightly longer routes.

You can read more about the different optimization approaches here: https://github.com/dostuffthatmatters/MSEatingOptimization/tree/master/Optimization/Optimizer

This is not the optimal solution since I did not apply brute-forcing to any pair of two matched guests. However I think that this optimization procedure would be rather unstable - without further optimization - and would not be a big improvement.

💡 Future development

Maybe I can use a public transport travel duration instead of geographical distance. The optimization will still be exactly the same.

I would have to think of some methods for reducing the number of API calls I'd have to make. Without optimization - 320 guests, 80 hosts: 320 * 80 public transport API requests